Do vision models perceive objects like toddlers?

Despite recent advances in artificial vision systems, humans are still more data-efficient at learning strong visual representations. Psychophysical experiments suggest that toddlers develop fundamental visual properties between the ages of one and three, which affect their perceptual system for the rest of their lives. They begin to recognize impoverished variants of daily objects, pay more attention to the shape of an object to categorize it, prefer objects in specific orientations and progressively generalize over the configural arrangement of objects' parts. This post examines whether these four visual properties also emerge in off-the-shelf machine learning (ML) vision models. We reproduce and complement previous studies by comparing toddlers and a large set of diverse pre-trained vision models for each visual property. This way, we unveil the interplay between these visual properties and highlight the main differences between ML models and toddlers. Code is available at https://github.com/Aubret/BabyML.

Introduction

Motivation

State-of-the-art machine learning vision models learn visual representations from millions or billions of diverse, independently and identically distributed images. In comparison, toddlers almost always play with and observe the same objects in the same playground, home and daycare environments. They have likely not seen even 10% of the different platypuses or sea snakes in ImageNet. In sum, toddlers likely experience a diversity of objects that is several orders of magnitude lower than that of current models. Unlike current machine learning (ML) vision models, they also do not have access to a massive amount of category labels (or aligned language utterances) or adversarial samples. Despite using different learning mechanisms, they develop strong semantic representations that are robust to image distortions, viewpoints, machine-adversarial samples and different styles (drawings, silhouettes…).

What perceptual mechanisms underpin such a robust visual system? Psychophysics experiments demonstrate that toddlers progressively reach fundamental visual milestones and develop visual biases during their second and third year. Specifically, toddlers become able to extract the category of simplified objects

In this blog post, we investigate the extent to which off-the-shelf ML models also exhibit these properties. Comparing vision models specifically to toddlers (not adults

Scope of the study

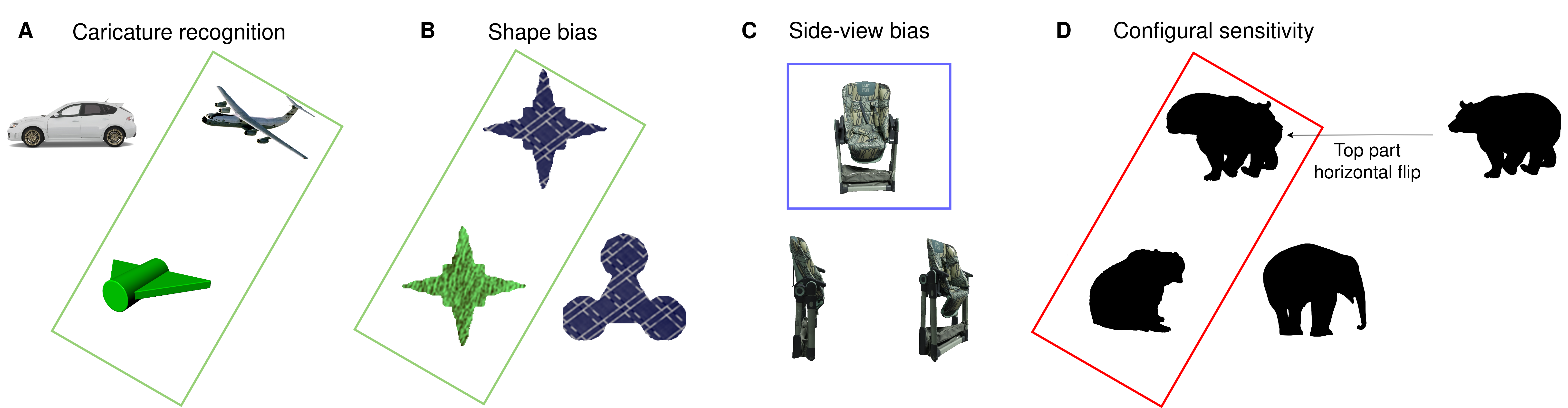

We divide our study into four parts, each corresponding to a specific visual property that emerges in toddlers and persists for the rest of their lives. In the following, we describe each property and clarify the scope of our experiments with respect to prior work. We do not aim to faithfully reproduce evaluation protocols from developmental studies, as they are often based on language and we do not want to limit our experiments to vision-language models. Instead, we use alternatives that aim to assess the presence of a visual property relative to toddlers. Vision-language models are still included in our comparison.

Caricature recognition

At around 18 months, toddlers become able to recognize impoverished variants of daily-life objects called caricatures. These caricatures are constructed from few simple shapes

Shape bias

When asked to match objects based on their kind (but also names etc.), toddlers group together novel objects that share the same shape, rather than objects that share the same color or texture

Side-view bias

From 18-24 months onward, toddlers learn to manipulate objects to see them (1) in upright positions and (2) with their main axis of elongation perpendicular to the line of sight, which we call side view (e.g. Figure 1, C)

Configural relation between parts

Starting at around 20 months, infants take into account the relative position of parts of an object to categorize it

TL;DR

Our analysis reveals two main visual milestones in machine learning models. The first one is the acquisition of the shape bias, which correlates with the ability to recognize normal objects and caricatures. In the strongest models, this bias is close to that of toddlers. We also find that the shape bias is clearly connected to side views being better prototypes of object instances, i.e. they are relatively more similar to other views of an object. However, side views are not prototypical enough to explain a side-view bias at the level of toddlers. The second milestone is the development of configural sensitivity, for which the level of adults (and probably toddlers) is out of reach for all investigated models. Neither of the two milestones is achieved by the investigated models that were trained on a reasonable amount of egocentric visual data. In sum, comparing the properties of toddlers' object perception with those of artificial vision systems suggests that there is room for ML improvements in 1) making the development of the shape bias more data-efficient and 2) learning to generalize based on the configural arrangement of parts. For 1), one should not overlook CNN architectures, as we found them to show a more robust shape bias on novel shapes than ViTs.

Experiments

Caricature recognition

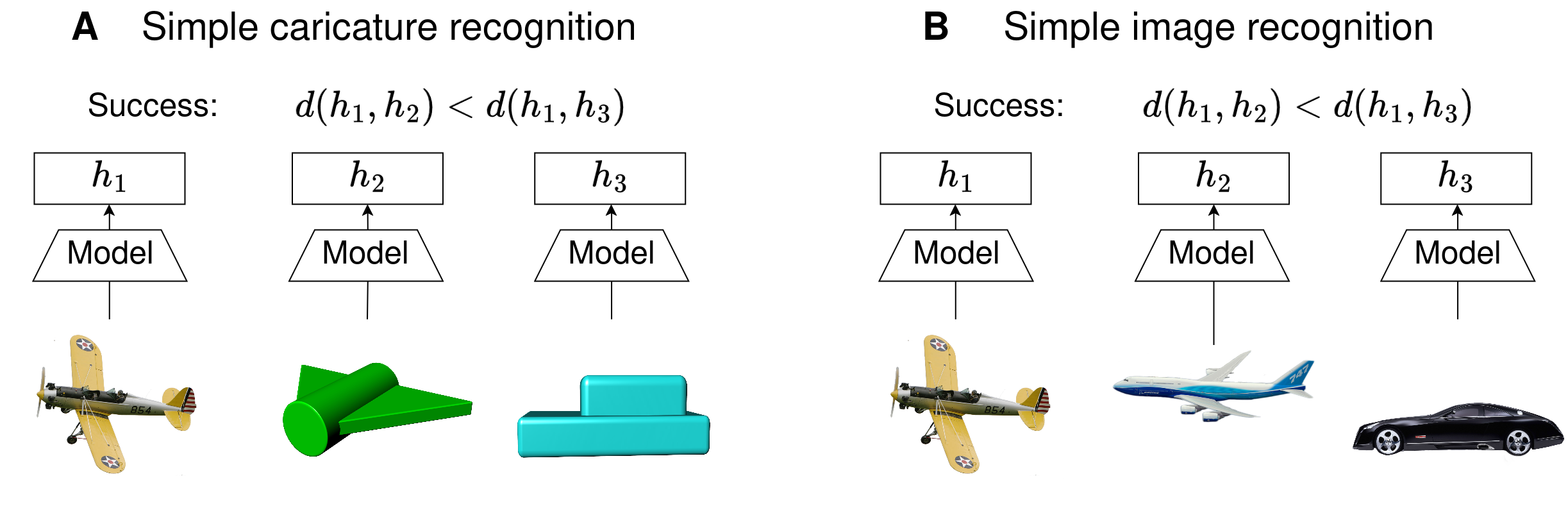

The main developmental protocol to evaluate caricature recognition is to ask a subject “where is the {object}” among a set of one object caricature matching the word and other non-matching object caricatures

Here, we modify their evaluation protocol to include models beyond vision-language models. We take the BabyVsModels dataset

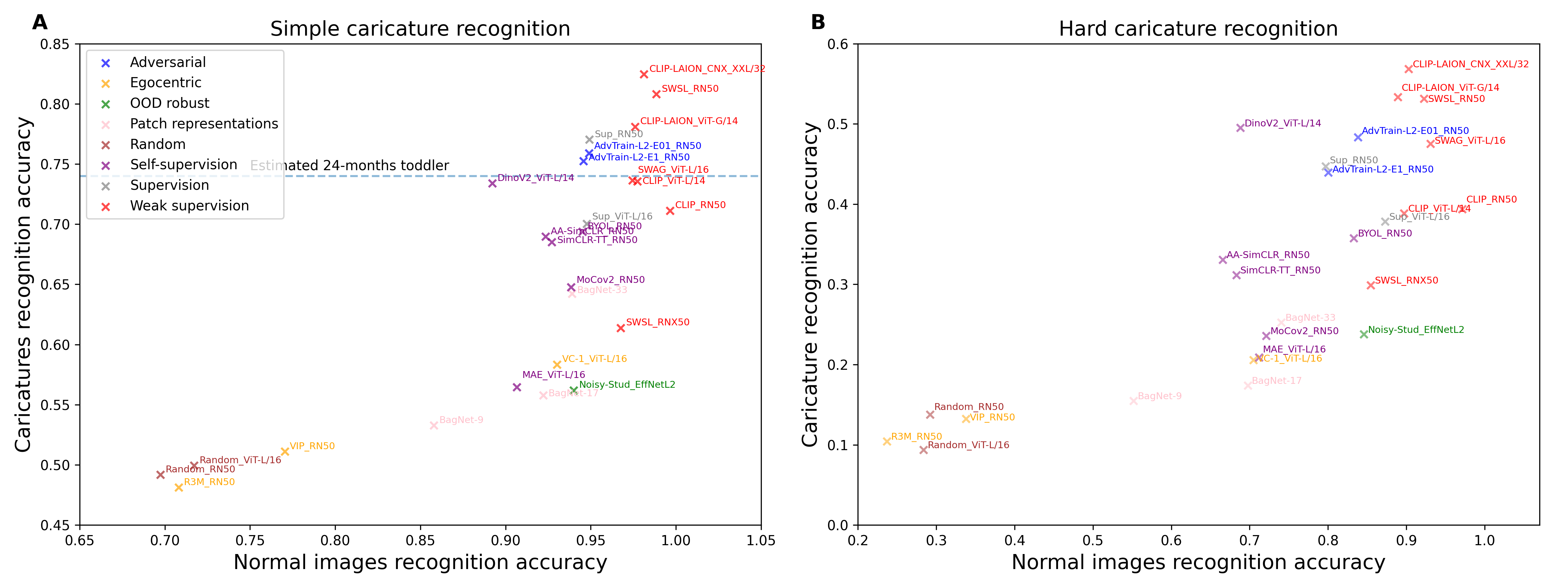

In Figure 3, A, we find that diverse models achieve a strong caricature recognition performance, at the estimated level of toddlers. Results confirm that supervised and vision-language models perform well on these tasks

Shape bias

It seems obvious that the best investigated models leverage objects’ shapes to measure image similarities, as texture and color cues are absent from caricatures. Here, we examine the shape bias of current models, compared to toddlers, and analyze the relationship between shape bias and caricature recognition.

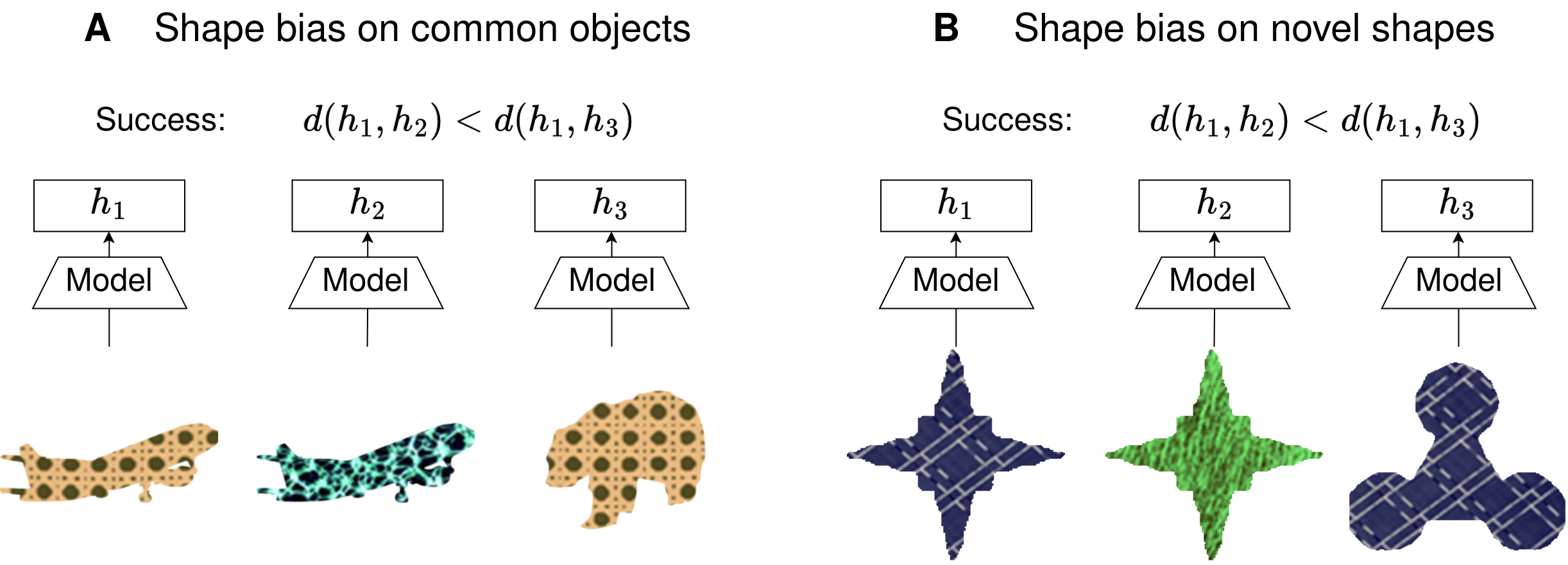

The cue-conflict protocol is the main experimental protocol to evaluate the shape bias in toddlers

ML benchmarks used to evaluate the shape bias of models

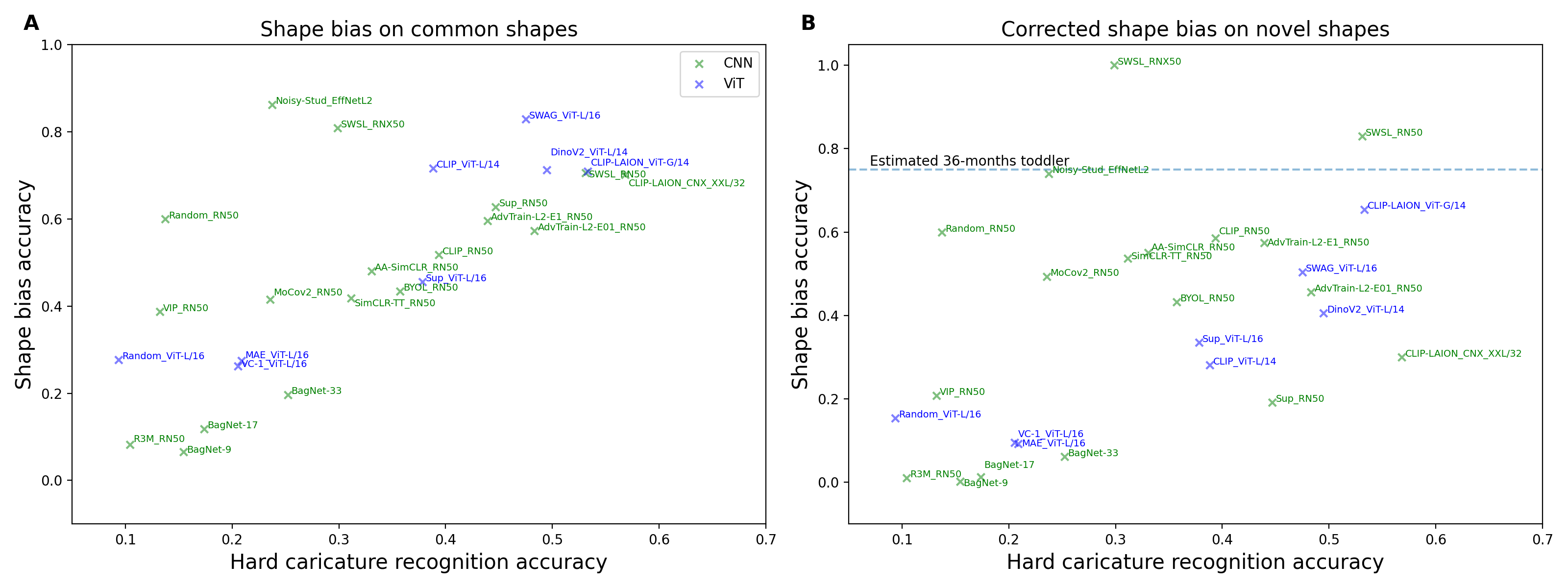

In Figure 5, A, we verify that there is a correlation between hard caricature recognition and the degree of shape bias over common objects (Pearson correlation: $0.68$). This is higher than the correlation with hard object recognition ($0.56$). We also find that language is not a mandatory component of the shape bias, as ID models seem to classify objects based on shape. In particular, DinoV2 shows a degree of shape bias similar to that of vision-language or supervised models. This is interesting because developmental studies clearly found that the productive vocabulary size is a strong predictor of the shape bias

In Tartaglini et al. (2022), the gap in shape bias between toddlers and models increases for novel shapes. When reproducing their results, we noticed that the performance of a random ResNet50 (Random_RN50, averaged over three random initializations) decreases between common and novel shapes. Since all shapes are novel for a random model, this suggests that the novel shapes used are inherently less prone to induce shape-based matching than the common objects. To naively correct for this effect, we multiply all scores by the ratio \(\frac{\texttt{ShapeBiasCommon} (\texttt{Random_RN50})}{\texttt{ShapeBiasNovel}(\texttt{Random_RN50})}=1.334\) and show the results in Figure 5, B. We find that the strongest models perform close to toddlers' performance; the best models, Noisy-Student and SWSL, match and outperform toddlers' performance, respectively. A close look at Figure 5, B seems to indicate that CNNs generalize the shape bias to novel objects better than ViTs (e.g. CLIP_RN50 versus CLIP_ViT-L/14), despite two outliers (Sup_RN50 and CLIP-LAION_CNX_XXL/32). This nuances previous claims of ViTs being more shape biased than CNNs
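The naive correction described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names are hypothetical, the correction is assumed to apply to the novel-shape scores, and the numbers are placeholders chosen so that the ratio equals the reported $1.334$. They are not measured values.

```python
def novelty_correction_ratio(random_common: float, random_novel: float) -> float:
    """Ratio of the random model's shape bias on common vs. novel shapes."""
    if random_novel <= 0:
        raise ValueError("random_novel must be positive")
    return random_common / random_novel


def correct_novel_scores(novel_scores: dict, ratio: float) -> dict:
    """Multiply every model's novel-shape score by the same ratio."""
    return {name: score * ratio for name, score in novel_scores.items()}


# Placeholder numbers (assumption), picked only so that the ratio is 1.334.
ratio = novelty_correction_ratio(random_common=0.667, random_novel=0.5)
corrected = correct_novel_scores({"model_a": 0.60, "model_b": 0.45}, ratio)
print(round(ratio, 3), corrected)
```

Because every score is scaled by the same factor, the ranking of models is unchanged; only their position relative to the toddler reference moves. Corrected scores are not clipped here and may exceed 1.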

Side views

The previous section indicates that ML models reach a high degree of shape bias with common objects. Concurrently with the emergence of the shape bias, toddlers also focus their attention on side views of objects

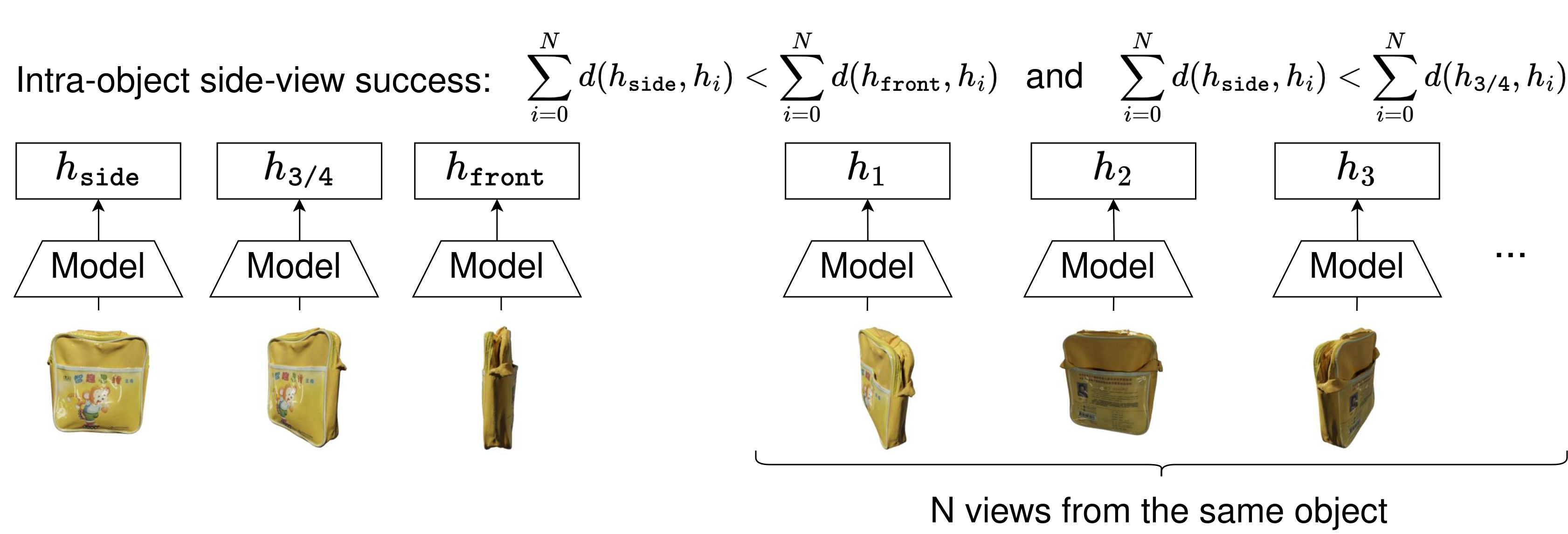

To investigate this question, we leverage the OmniObject3D dataset

To assess the specificity of each of these main views, we propose two metrics:

- the intra-object distance as the average distance between the representation of a given main view of an object and the representations of all other views of the same object;

- the intra-category distance as the average distance between the representation of the given main view of an object and the representations of all other views in the same category.

For each object, we retrieve the main view that maximizes and the one that minimizes each metric. Finally, we compute the side-view accuracy as the proportion of objects for which the main side view maximizes these metrics. We similarly compute the 3/4-view and front-view accuracy. This allows us to assess which main orientation is more prototypical for an object or a category. Figure 6 illustrates the procedure.
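The two metrics and the resulting view accuracy can be sketched as follows. This is a minimal illustration, not the code from the repository: it assumes cosine distance between representations, a fixed order of main views (side, 3/4, front), and random placeholder embeddings. All function names are hypothetical. The selection rule is a parameter, so that either the maximizing or the minimizing view can be retrieved.

```python
import numpy as np


def cosine_distance_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine distances between rows of a (n, d) and rows of b (m, d)."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return 1.0 - a @ b.T


def mean_distance_per_main_view(main_views: np.ndarray, other_views: np.ndarray) -> np.ndarray:
    """Average distance from each main view to all comparison views.

    Pass the other views of the same object for the intra-object metric,
    or the other views of the same category for the intra-category metric.
    """
    return cosine_distance_matrix(main_views, other_views).mean(axis=1)


def view_accuracy(per_object_scores: np.ndarray, view_index: int, select=np.argmax) -> float:
    """Fraction of objects whose selected main view is `view_index`.

    per_object_scores has shape (n_objects, n_main_views).
    """
    chosen = select(per_object_scores, axis=1)
    return float(np.mean(chosen == view_index))


# Placeholder embeddings (assumption): 5 objects, 3 main views, 20 other views, dim 8.
rng = np.random.default_rng(0)
main = rng.normal(size=(5, 3, 8))
others = rng.normal(size=(5, 20, 8))
scores = np.stack([mean_distance_per_main_view(m, o) for m, o in zip(main, others)])
side_view_accuracy = view_accuracy(scores, view_index=0)
```

Passing `select=np.argmin` instead retrieves the main view with the smallest average distance for each object.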

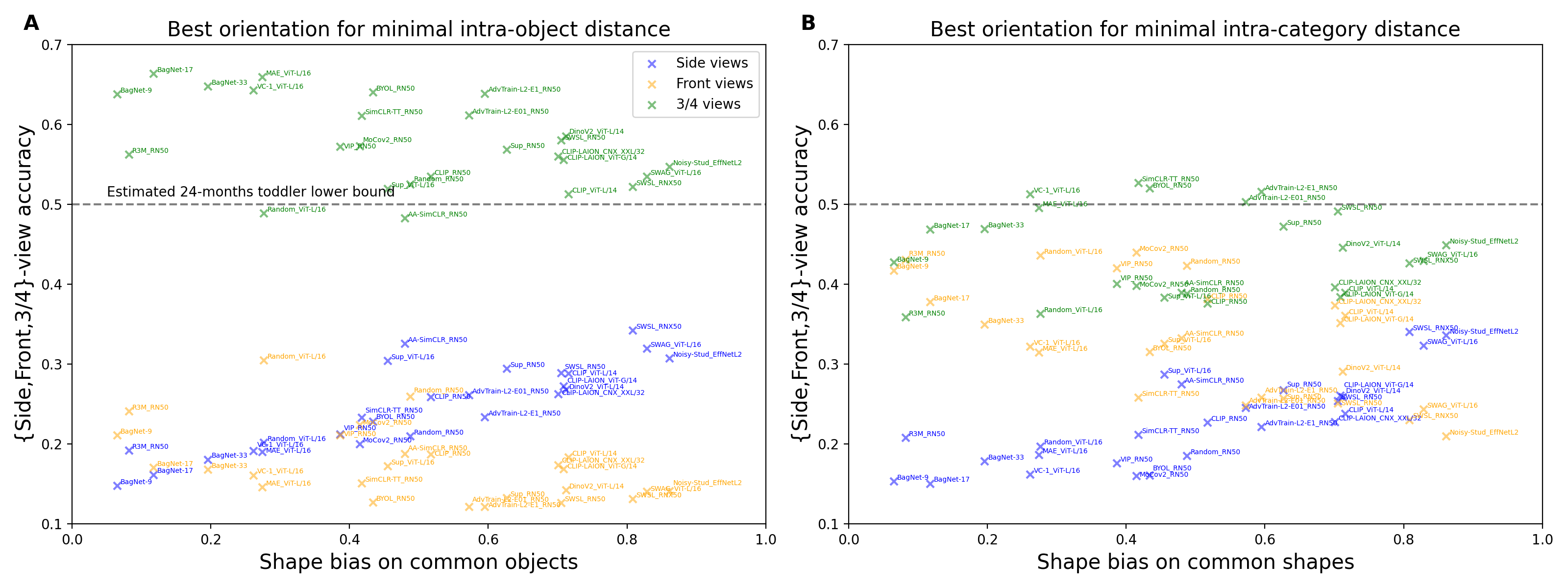

First, we compute the Pearson correlation between the intra-object side-view accuracy and our previously reported measures. We find that the metric that most highly correlates with the side-view accuracy is the shape bias on common objects (Pearson correlation: $0.86$). The correlation is similar for intra-category side-view accuracy (Pearson correlation: $0.80$). Visualizing the results in Figure 7 confirms that the higher the shape bias, the more prototypical the side views are. The fact that the shape bias correlates with side views being more prototypical aligns with the fact that the shape bias emerges during the same period as the side-view bias in toddlers. This correlation supports the hypothesis that toddlers may turn objects towards side views because they are more prototypical.
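For readers who want to recompute such correlations from per-model scores, the Pearson coefficient can be sketched as below. The function name is hypothetical and the two score lists are made-up placeholders with one entry per model. They are not results from this study.

```python
import numpy as np


def pearson(x, y) -> float:
    """Pearson correlation between two equally long sequences of per-model scores."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape or x.ndim != 1:
        raise ValueError("x and y must be 1-D sequences of the same length")
    xc = x - x.mean()
    yc = y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))


# Placeholder values (assumption): one entry per model.
shape_bias_common = [0.35, 0.50, 0.62, 0.71, 0.80]
side_view_acc = [0.30, 0.33, 0.41, 0.40, 0.52]
r = pearson(shape_bias_common, side_view_acc)
```

The same value can be obtained with `np.corrcoef(x, y)[0, 1]`; the explicit version only makes the centering and normalization visible.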

Interestingly, AA-SimCLR

We did not find raw data on the time spent by toddlers on side views. However, current studies found (on a different set of objects) that toddlers focus more on these views than on 3/4 views

Configural relation between parts

Paying attention to the shape of an object is different from looking at the relative position of all parts. It could be that the model only extracts local

The ability to categorize objects based on the configural arrangement of their parts starts its development in toddlers

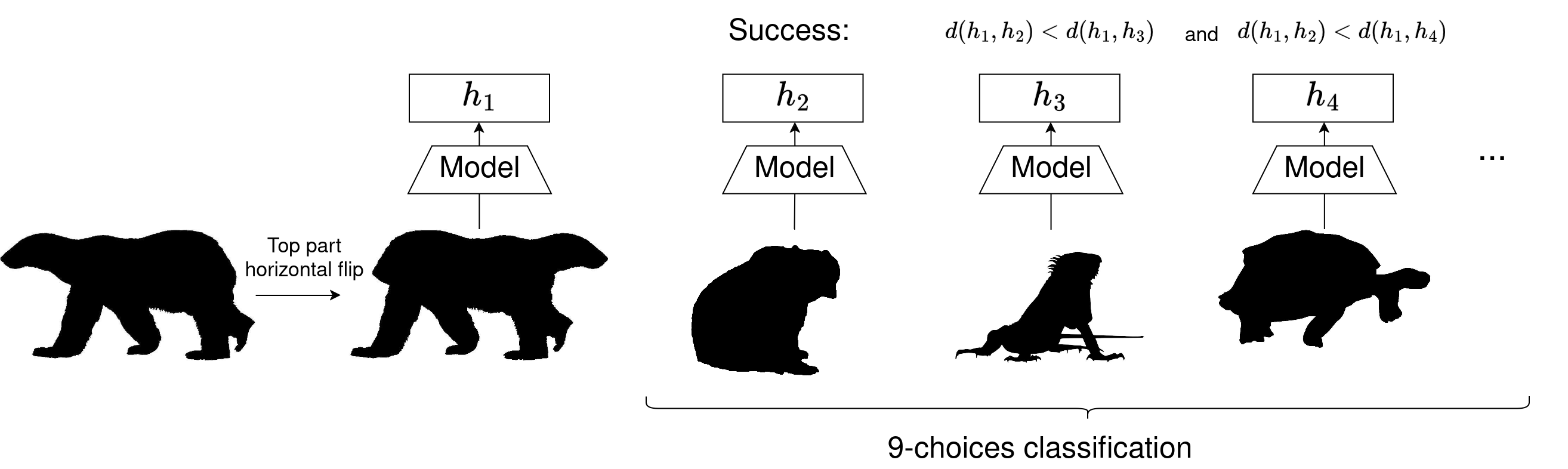

Thus, we take the set of stimuli from Baker et al. (2022), which comprises 9 categories of animals, each containing 40 original and Frankenstein silhouettes. To reproduce experiments from Baker et al. (2022) without a classifier, we sample one normal silhouette from each category and one Frankenstein silhouette. We compute the cosine similarity between the Frankenstein silhouette and all normal silhouettes and define the accuracy as how often the cosine similarity between the Frankenstein and the category-matching normal silhouette is the largest (Frankenstein test). To assess the relative performance, we apply the procedure again after replacing the Frankenstein silhouette with another normal silhouette (normal test). Finally, we compute the configural sensitivity as the success rate of the Frankenstein test minus the success rate of the normal test. To show sensitivity to the spatial arrangement of parts, the metric must be lower than $0$.
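The procedure above can be sketched as follows, assuming representations have already been extracted as vectors. The function names and the trial format (a query, one reference per category, and the index of the matching category) are illustrative assumptions rather than the repository's API.

```python
import numpy as np


def matches_category(query: np.ndarray, references: np.ndarray, target: int) -> bool:
    """True if the reference most cosine-similar to `query` sits at index `target`."""
    q = query / np.linalg.norm(query)
    r = references / np.linalg.norm(references, axis=1, keepdims=True)
    return int(np.argmax(r @ q)) == target


def success_rate(trials) -> float:
    """trials: list of (query, references, target) tuples."""
    outcomes = [matches_category(q, refs, t) for q, refs, t in trials]
    return float(np.mean(outcomes))


def configural_sensitivity(frankenstein_trials, normal_trials) -> float:
    """Frankenstein success rate minus normal success rate (negative = sensitive)."""
    return success_rate(frankenstein_trials) - success_rate(normal_trials)


# Toy vectors (assumption): three categories, one normal reference each.
refs = np.eye(3)
normal_trials = [(np.array([1.0, 0.1, 0.0]), refs, 0)]
frankenstein_trials = [
    (np.array([0.1, 1.0, 0.0]), refs, 0),  # rearranged parts push it towards category 1
    (np.array([1.0, 0.0, 0.2]), refs, 0),  # still closest to its original category
]
sensitivity = configural_sensitivity(frankenstein_trials, normal_trials)
```

A model that ignores the arrangement of parts would match Frankenstein silhouettes as often as normal ones, which gives a sensitivity near $0$.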

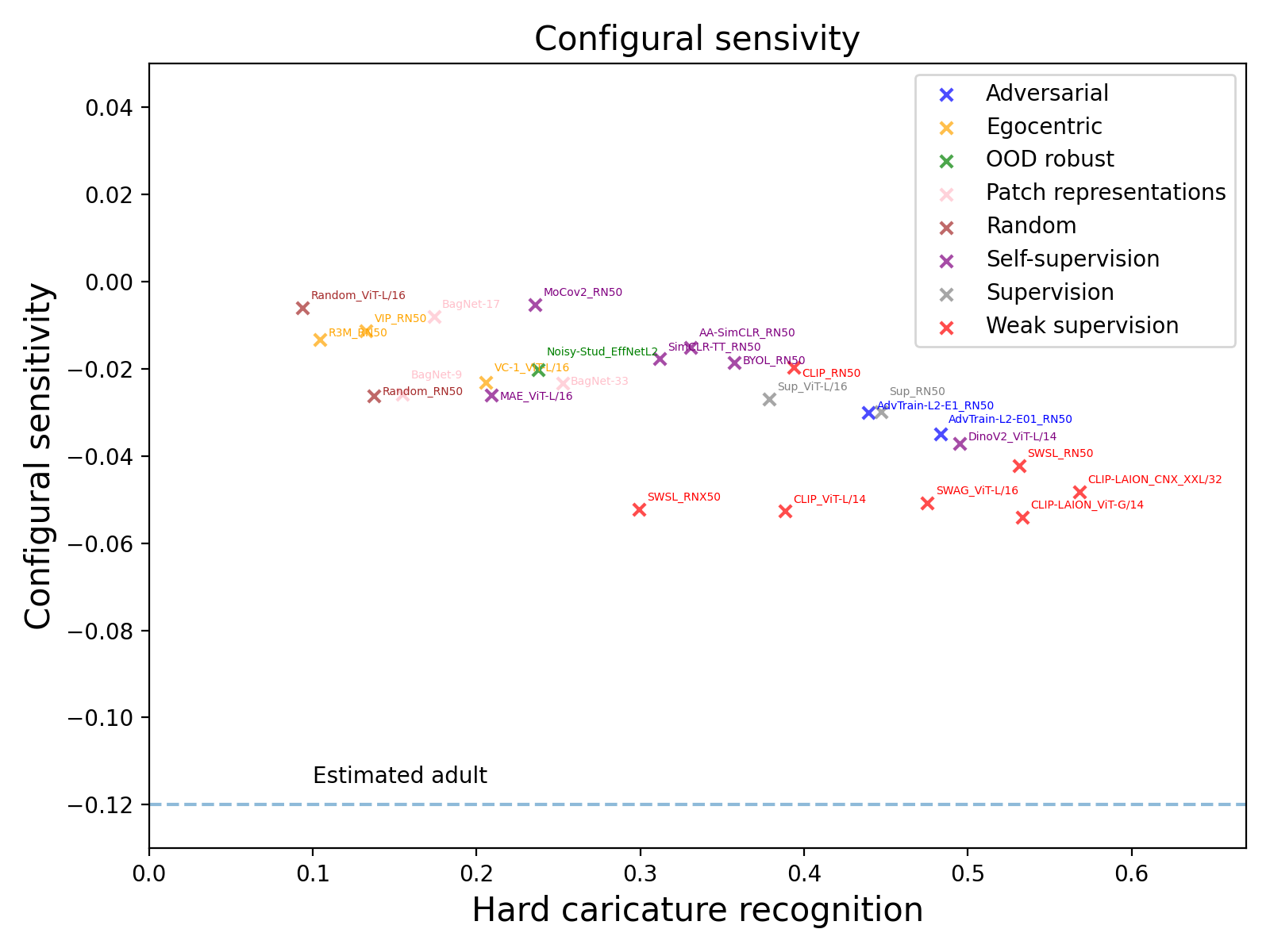

We first compute the Pearson correlation score between configural sensitivity and hard image recognition ($-0.58$), hard caricature recognition ($-0.71$), shape bias on common objects ($-0.69$) and shape bias on novel objects ($-0.41$). Given that hard caricature recognition is the most highly correlated metric, we plot configural sensitivity against hard caricature recognition in Figure 9. We observe that the configural sensitivity of current models is very weak, barely lower than $0$. Only large-scale weakly supervised models are significantly more sensitive than a BagNet-9, a model that cannot show configural sensitivity by design. All models are less sensitive than the estimated adult level. We conclude that even shape-biased models likely rely on local shape cues instead of the global arrangement of parts.

Conclusion

In this blog post, we have extended previous studies to investigate whether current ML models learn four fundamental visual properties that emerge in toddlers. While we did not observe major effects of the training setup (supervised, adversarial, self-supervised…), we found that cutting-edge models reach the estimated level of a toddler with respect to caricature recognition and the shape bias on common objects. For the shape bias on novel objects, the best models are close to toddlers. However, most of the considered models use bio-implausible data: far more diverse images, labels, millions of aligned language utterances, adversarial samples, etc. Models trained with egocentric visual images comparable to those humans experience (VC-1, VIP, R3M) perform poorly on all benchmarks. In addition, other ML models still perform poorly on configural sensitivity and the proposed side-view bias compared to toddlers and adults, suggesting there is room for improvement. We cannot draw conclusions about the performance of toddlers on configural sensitivity for the considered task. However, given the emerging configural sensitivity of toddlers

We found that object recognition abilities positively correlate with caricature recognition, which also positively correlates with a strong shape bias. This suggests that these properties are connected in ML models. However, it does not mean that increasing the shape bias systematically leads to better recognition abilities, as evidenced by the Noisy-Student model (high shape bias, relatively low caricature recognition) and prior works

From a developmental perspective, prior work found that productive vocabulary size is a strong predictor of the shape bias in toddlers

The origin and consequences of the side-view bias for toddlers are mostly unclear in the developmental literature. It may emerge because these views are very stable for small rotations

This study has several limitations. First, the experimental protocol does not perfectly follow either developmental experiments (often based on language) or machine learning protocols (often based on vision-language models or supervised labels). We chose not to rely on language in order to test a broad set of models beyond vision-language models, and we decided not to use supervised heads, as it is unclear how they relate to toddlers' visual representations. Thus, we assume that, during their task, toddlers perform a form of cognitive comparison that resembles our comparison of representations. Second, the set of stimuli generally varies between developmental studies and ours, which allows us only to approximate how a toddler would perform. This is especially salient in our study of configural sensitivity: to the best of our knowledge, toddlers have not been tested on Frankenstein silhouettes. Third, the set of stimuli is small and often biased (very small for caricatures, only animals for configural sensitivity, white background in almost all datasets…). Despite that, our study provides a global picture of the presence and interplay of toddlers' visual properties in ML models. We hope it will spur research aimed at addressing the gap between models and toddlers.

Acknowledgments

This work was funded by the Deutsche Forschungsgemeinschaft (DFG project Abstract REpresentations in Neural Architectures (ARENA)), as well as the projects The Adaptive Mind and The Third Wave of Artificial Intelligence funded by the Excellence Program of the Hessian Ministry of Higher Education, Science, Research and Art (HMWK). JT was supported by the Johanna Quandt foundation. We gratefully acknowledge support from the Goethe-University (NHR Center NHR@SW) and Marburg University (MaRC3a) for providing computing and data-processing resources needed for this work.

Models

To reproduce and strengthen the claims reported in prior papers and in this blog post, we test a very diverse set of pre-trained models, including those addressing specific shortcomings of ML models. We use a mix of convolutional architectures (CNN) and vision transformers (ViT).

As in most previous experiments, we assess standard models based on random initializations and supervised ImageNet training for both ResNet50 and ViT-L. We also consider two different adversarially trained supervised models

On the weakly supervised side, we consider a strong ViT-L/16 trained on a large-scale dataset of hashtags (SWAG)

We further add two strong methods for out-of-distribution image classification

We include state-of-the-art self-supervised instance discrimination (ID) methods trained on ImageNet-1K, namely MoCoV2

Finally, we evaluate three vision models trained on robotic and egocentric data, namely VIP